News related to climate change aggregated daily by David Landskov. Link to original article is at bottom of post.

Sunday, December 31, 2017

Saturday, December 30, 2017

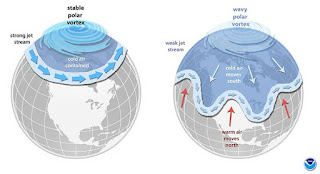

Ice Loss and the Polar Vortex: How a Warming Arctic Fuels Cold Snaps

The loss of sea ice may be weakening the polar vortex, allowing cold blasts to dip south from the Arctic, across North America, Europe, and Russia, a new study says.

When winter sets in, "polar vortex" becomes one of the most dreaded phrases in the Northern Hemisphere. It's enough to send shivers even before the first blast of bitter cold arrives.

New research shows that some northern regions have been getting hit with these extreme cold spells more frequently over the past four decades, even as the planet as a whole has warmed. While it may seem counterintuitive, the scientists believe these bitter cold snaps are connected to the warming of the Arctic and the effects that that warming is having on the winds of the stratospheric polar vortex, high above the Earth's surface.

Here's what scientists involved in the research think is happening: The evidence is clear that the Arctic has been warming faster than the rest of the planet. That warming is reducing the amount of Arctic sea ice, allowing more heat to escape from the ocean. The scientists think that the ocean energy that is being released is causing a weakening of the polar vortex winds over the Arctic, which normally keep cold air centered over the polar region. That weakening is then allowing cold polar air to slip southward more often.

Read more at Ice Loss and the Polar Vortex: How a Warming Arctic Fuels Cold Snaps

Friday, December 29, 2017

Thursday, December 28, 2017

How to Get Wyoming Wind to California, and Cut 80% of U.S. Carbon Emissions

High-voltage direct-current transmission lines hold the key to slashing greenhouse gases.

The $3 billion TransWest Express Transmission Project is among a handful of proposed direct-current transmission lines in the United States, and one of the furthest along in the planning process. It underscores the huge promise of these high-capacity lines to unlock the full potential of renewable energy.

Transmission isn’t sexy. It’s basic infrastructure: long wires and tall towers.

But a growing body of studies concludes that building out a nationwide network of DC transmission lines could help enable renewable sources to supplant the majority of U.S. energy generation, offering perhaps the fastest, cheapest, and most efficient way of slashing greenhouse-gas emissions.

Developing these transmission lines, however, is incredibly time-consuming and expensive. The TransWest project was first proposed in 2005, but the developers will be lucky to secure their final permits and begin moving dirt at the end of next year.

There’s no single agency in charge of overseeing or ushering along such projects, leaving companies to navigate a thicket of overlapping federal, state, county, and city jurisdictions—every one of which must sign off for a project to begin. As a result, few such transmission lines ever get built.

A macro grid

Direct current, in which electric charges constantly flow in a single direction, is an old technology. It and alternating current were the subject of one of the world’s first technology standards battles, pitting Thomas Edison against his former protégé Nikola Tesla in the “War of the Currents” starting in the 1880s.

AC won this early war, mainly because, thanks to the development of transformers, its voltage could be cranked up for long-distance transmission and stepped down for homes and businesses.
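Why does raising the voltage matter so much for long lines? A back-of-the-envelope sketch helps, assuming a simple resistive model in which line current is I = P / V and the heat lost in the wires is I²R. The figures (1 GW sent over a line with 2 ohms of total resistance) are hypothetical and chosen only for illustration; the model ignores reactive power, converter and transformer losses, and other real-world effects.

```python
def line_loss_fraction(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Fraction of transmitted power lost as heat in the line.

    Simplified resistive model (an assumption, not an engineering tool):
    current I = P / V, and resistive loss = I^2 * R.
    """
    current_a = power_w / voltage_v
    loss_w = current_a ** 2 * resistance_ohm
    return loss_w / power_w


# Hypothetical example: 1 GW delivered over a line with 2 ohms total resistance.
POWER_W = 1e9
RESISTANCE_OHM = 2.0

for kv in (100, 500):
    frac = line_loss_fraction(POWER_W, kv * 1e3, RESISTANCE_OHM)
    print(f"{kv} kV: {frac:.1%} of power lost")
# 100 kV: 20.0% of power lost
# 500 kV: 0.8% of power lost
```

In this simplified model, five times the voltage means one-fifth the current and therefore one twenty-fifth of the resistive loss, which is the advantage transformers gave AC.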

But a series of technological improvements has substantially increased the functionality of DC, opening up new ways of designing and interconnecting the electricity grid.

...

With direct-current lines, grid operators have more options for energy sources throughout the day, allowing them to tap into, say, cheap wind two states away during times of peak demand instead of turning to nearby but more expensive natural-gas plants for a few hours. The fact that regions can depend on energy from distant states for their peak demand also means they don’t have to build as much high-cost generation locally.

The point-to-point DC transmission scenario demonstrated the highest immediate economic return in the study, which will be published in the months ahead. But the macro-grid approach offers far greater redundancy and resilience, ensuring that the grid keeps operating if any one line goes down. It also makes it possible to build out far more renewable energy generation.

“The macro grid gives you a highway to all those loads and ties all those markets together,” says Dale Osborn, transmission planning technical director at the Midcontinent Independent System Operator (MISO), which first designed the system. “You get the most efficient, lowest-cost energy possible.”

Giant batteries

A national direct-current grid could also help lower emissions to as much as 80 percent below 1990 levels within 15 years, all with commercially available technology and without increasing the costs of electricity, according to an earlier study in Nature Climate Change.

Read more at How to Get Wyoming Wind to California, and Cut 80% of U.S. Carbon Emissions

Sea Level Rise Is Creeping into Coastal Cities. Saving Them Won’t Be Cheap.

Norfolk and Miami frequently see nuisance flooding now. The cost to protect them and other coastal cities in the future is rising with the tide.

To get a sense of how much it will cost the nation to save itself from rising seas over the next 50 years, consider Norfolk, Virginia.

In November the Army Corps released a proposal for protecting the city from coastal flooding that would cost $1.8 billion. Some experts consider the estimate low. And it doesn't include the Navy's largest base, which lies within city limits and likely needs at least another $1 billion in construction.

Then consider the costs to protect Boston, New York, Baltimore, Miami, Tampa, New Orleans, Houston, and the more than 3,000 miles of coastline in between.

Rising seas driven by climate change are flooding the nation's coasts now. The problem will get worse over the next 50 years, but the United States has barely begun to consider what's needed and hasn't grappled with the costs or who will pay. Many decisions are left to state and local governments, particularly now that the federal government under President Donald Trump has halted action to mitigate climate change and reversed nascent federal efforts to adapt to its effects.

- Globally, seas have risen about 7 to 8 inches on average since 1900, with about 3 inches of that coming since 1993. They're very likely to rise at least 0.5-1.2 feet by 2050 and 1-4.3 feet by 2100, and a rise of more than 8 feet by century's end is possible, according to a U.S. climate science report released this year. Because of currents and geology, relative sea level rise is likely to be higher than average in the U.S. Northeast and western Gulf Coast.

- By the time the waters rise 14 inches, what's now a once-in-five-years coastal flood will come five times a year, a recent government study determined. By 2060 the number of coastal communities facing chronic flooding from rising seas could double to about 180, even if we rapidly cut emissions, according to a study by the Union of Concerned Scientists. If we don't slash emissions, that number could be 360.

- The costs from this inundation will be tremendous. One study, published in the journal Science in June, found that rising seas and more powerful tropical storms will cost the nation an additional 0.5 percent of GDP annually by 2100. Another, by the real estate firm Zillow, estimated that a rise of 6 feet by 2100 would inundate nearly 1.9 million homes worth a combined $882 billion.

- Estimating what it would cost to avoid some of this is trickier. It will certainly be expensive—look no further than Norfolk's $1.8 billion—but some research indicates it may be less costly than failing to act. An analysis by NRDC found that buying out low- and middle-income owners of single-family homes that repeatedly flood could save the National Flood Insurance Program between $20 billion and $80 billion by 2100.

- The Trump administration, however, has revoked or withdrawn at least six federal programs or orders intended to help make the nation's infrastructure more resilient to rising seas and climate change. That includes an executive order signed by President Barack Obama in 2015 that expanded the federal floodplain to account for climate change projections and limited or controlled any federal infrastructure spending within that zone. Trump revoked the order in August. Trump also disbanded a panel that was trying to help cities adapt to climate change.

Build Smarter, Move Away from the Risk

Moore said the country faces two main tasks: First, we have to stop building in risky, flood-prone areas on the coast. Then we need to find ways to move people who already live there. One 2010 study estimated that 8.4 million Americans live in high-risk coastal flood areas.

Currently, federal policies including the National Flood Insurance Program are designed to encourage people to rebuild where they are, even if their properties are likely to flood again.

"What we do now is we basically wait for a disaster to happen and a massive disruption to occur and then we scramble around to get people back to where they were," Moore said.

Read more at Sea Level Rise Is Creeping into Coastal Cities. Saving Them Won’t Be Cheap.

‘Build Back Better’ in Puerto Rico — Microgrids Treated Shoddily - by Mahesh Bhave

This month, a steering committee constituted in the wake of Hurricane Maria, which devastated Puerto Rico, wrote a report, "Build Back Better: Reimagining and Strengthening the Power Grid of Puerto Rico," for New York Gov. Andrew Cuomo, Puerto Rico Gov. Ricardo Rosselló, and William Long, Administrator of the U.S. Federal Emergency Management Agency.

Following the hurricane, many commentators (and I) wrote to say: Puerto Rico should deploy microgrids. I was anxious to see what the report recommended regarding microgrids. In the interim, the Puerto Rico Energy Commission on Nov. 10 issued a Resolution and Order that asked fundamental and important questions about grid restoration, including microgrids, and sought public comments. By early December, the Commission had received more than 50 responses.

Unfortunately, the Build Back Better report is disappointing. While it gives a good overview of the damage to the electricity infrastructure after Hurricane Maria, and identifies what is needed for a hardened, resilient electricity supply, its analysis contains inaccuracies and mischaracterizations relating to microgrids.

- Timelines are absurd: To quote, “Investments in microgrids would occur strategically over a five to ten-year period, …” Ten years?! This strains credulity. The fact is: Microgrids can be deployed now. The report further notes, “The Active DER [that is, Distributed Energy Resources that interact with the grid] cannot be fully implemented until requisite communications and control systems are installed - likely three to five years out” [highlights, italics added]. Again, the untenable five-years-out projection! Moreover, why do microgrids need to interact with the rest of the grid? They can be standalone, or interact among each other and work as a cluster, as a “federation of microgrids.” In fact, the report recognizes this possibility of standalone microgrids in another section, as discussed below.

- Standalone microgrids are recognized but discounted: The report notes, “For some remote areas of the island, it may be feasible to more fully isolate these communities and design them to operate as separate and discrete grids. The [Working Group] recommends the consideration of feasibility studies and stakeholder discussions with local leaders and interested third parties to determine if investments in permanent disconnection from the main [Puerto Rico Electric Power Authority (PREPA)] grid are in the public interest, provide more cost-effective resiliency from natural disasters, and provide adequate service quality and reliability.” This option should have been explored more thoroughly in the report.

- Microgrids are poorly defined and characterized: The report’s definition of the microgrid is flawed: “A microgrid is a specific section of the electric grid …” Not necessarily. A microgrid can work as a self-sufficient, independent system, as happens with island microgrids, and as the working group itself recognizes in the point above. Further, the report states that a microgrid “has the capability of ‘islanding’ itself from the rest of the electric grid and operate in isolation for hours or even days at a time …” This is true. But the following is not: “… while most of the year they retain connection to the centralized grid” [highlights, italics added]. Microgrids may retain connection with the grid, but only as an option.

- Internet impact is missing: The report should have addressed the state of the Internet infrastructure following Hurricane Maria, because the Internet can serve as the substrate for all intelligent control and communications for managing microgrids, especially since the island has a robust Giganet Island Plan, and PREPA’s sister agency, PREPA Networks, has extensive fiber-optic infrastructure. The report’s position, … that “the communications and controls infrastructure required to actively manage DER [Distributed Energy Resources, that is microgrids]” must be provided in a centralized way, through upgrades to the centralized grid, is an unnecessary presumption. Indeed, the internet is the “smart” grid!

The Steering Committee has no representation from engineering or technology companies with experience in the microgrid space. Where are Tesla, Sonnen, and the host of others who offered products and services in the immediate aftermath of the hurricane? Nor does the committee include broadband expertise, such as EPB in Chattanooga, TN, a municipal electric utility that has expanded into gigabit broadband infrastructure.

Puerto Rico’s future electricity solutions are held back due to antiquated utility mindsets on the steering committee. Microgrids in Puerto Rico can and should be deployed immediately in the island’s public interest.

Read more at ‘Build Back Better’ in Puerto Rico — Microgrids Treated Shoddily

Polar Ice Is Disappearing, Setting Off Climate Alarms

The short-term consequences of Arctic (and Antarctic) warming may already be felt in other latitudes. The long-term threat to coastlines is becoming even more dire.

Turns out, when you heat up ice, it melts. And with 2017 likely going down as one of the warmest years on record worldwide, this year's climate change signal was amplified at the Earth's poles.

There, decades-old predictions of intense warming have been coming true. The ice-covered poles, both north and south, continue to change at a breathtaking pace, with profound long-term consequences, according to the scientists who study them closely.


And the consequences are destined to spill over into other parts of the globe, through changing atmospheric patterns, sea currents and feedback loops of ever-intensifying melting.

The past year may not have broken annual records, but it provided ample evidence of where long-term trends are heading. "Even though we're not setting records every year, and we don't expect to because of natural variability, we're not anywhere close to the averages we saw in the 1980s, 1990s and before," said Walt Meier, a senior researcher at the National Snow and Ice Data Center.

- The area covered by sea ice in the Arctic hit record lows through the winter of 2017. In March, when the sea ice hit its largest extent of the year, it was lower than it ever had been in the nearly 40-year satellite record. The spring melt began a month earlier than normal, and though the pace of decline slowed some over the summer, the Bering and Chukchi Seas along Alaska's coast remained ice-free longer into the fall than ever before.

- In November NASA reported that two to four times as many coastal glaciers around Greenland are at risk of accelerated melting as previously thought. Greenland is losing an average of 260 billion tons of ice each year. In mid-September a surge of warm air caused a spike in surface melting in southern Greenland, one of the largest spikes to occur in September since 1978.

- In Antarctica the ice also recorded new lows. The annual low point in ice coverage, which happened in early March, was the lowest on record. A few months later, in July, a trillion-ton section of Antarctica's Larsen C ice shelf broke off.

When the Jet Stream Gets Loopy

The consequences are profound.

The modern weather system has been defined by cold poles and warmer mid-latitudes. Along the boundary of the regions, where the cold air meets the warm, you'll find bands of strong wind, known as the jet stream.

The jet stream typically behaves in expected or at least understood ways, but every now and then something wacky happens. It dips, it wobbles, and suddenly the Northeast finds itself in the chilly embrace of a polar vortex; or California finds itself in yet another drought; or Seattle is doused in days of rain.

"It's basically extreme weather when you get that loopy jet stream," said Meier.

A growing body of science, some of it recently published, is finding that as there are more years with historically low sea ice levels, there is an associated uptick in wobbly jet streams.

Read more at Polar Ice Is Disappearing, Setting Off Climate Alarms

Wednesday, December 27, 2017

Utility-scale Solar Installations Can Avoid Using Farmland, Study Says

Across the U.S., the energy and agricultural industries are battling it out over whether to place solar panels or crops on large stretches of flat, sunny land. Now, a new study finds that developing solar energy arrays on alternative sites like buildings, lakes, and contaminated land would allow California to meet its 2025 electricity demands without sacrificing farmland.

The study’s authors, all from the University of California system, focused their analysis on California’s Central Valley. The Valley makes up 15 percent of California’s total land, and 3,250 of the Valley’s 20,000 square miles were classified as non-agricultural spaces viable for solar energy. But the authors calculate that development of solar power on just these lands would create enough electricity to power all of California 13 times over (with photovoltaic panels) or two times over (with concentrating solar power).

The Central Valley is a key agricultural region, supplying 8 percent of U.S. annual agricultural output, according to the U.S. Geological Survey (USGS). But the region’s immense energy demand and its year-round sunny skies also make it a prime location for solar energy development. The new study, published in the journal Environmental Science & Technology, finds a potential balance between agricultural concerns and the regional demand for solar energy.

“In the era of looming land scarcity, we need to look at underused spaces,” said Rebecca R. Hernandez, an assistant professor at UC Davis and co-author of the study. “This paper provides a menu for farmers, agricultural stakeholders, and energy developers to think about energy projects on spaces that don’t require us to lose prime agricultural and natural lands, which are becoming increasingly limited.”

The authors identified four types of non-agricultural spaces that could support solar development: the built environment, including buildings, rooftops, and parking lots; land with soil too salty for farming; reclaimed contaminated land; and reservoirs.

Read more at Utility-scale Solar Installations Can Avoid Using Farmland, Study Says

Tuesday, December 26, 2017

Climate Change Is Happening Faster than Expected, and It’s More Extreme

New research suggests human-caused emissions will lead to bigger impacts on heat and extreme weather, and sooner than the IPCC warned just three years ago.

In the past year the scientific consensus shifted toward a grimmer and less uncertain picture of the risks posed by climate change.

When the UN's Intergovernmental Panel on Climate Change issued its Fifth Assessment Report in 2014, it formally declared that observed warming was "extremely likely" to be mostly caused by human activity.

This year, a major scientific update from the United States Global Change Research Program put it more bluntly: "There is no convincing alternative explanation."

Other scientific authorities have issued similar assessments:

The Royal Society published a compendium of how the science has advanced, warning that it seems likelier that we've been underestimating the risks of warming than overestimating them.

The American Meteorological Society issued its annual study of extreme weather events and said that many of those it studied this year would not have been possible without the influence of human-caused greenhouse gas emissions.

The National Oceanic and Atmospheric Administration (NOAA) said recent melting of the Arctic was not moderating and was more intense than at any time in recorded history.

While 2017 may not have hit a global temperature record, it is running in second or third place, and on the heels of records set in 2015 and 2016. Talk of some kind of "hiatus" seems as old as disco music.

'A Deadly Tragedy in the Making'

Some of the strongest warnings in the Royal Society update came from health researchers, who said there hasn't been nearly enough done to protect millions of vulnerable people worldwide from the expected increase in heat waves.

"It's a deadly tragedy in the making, all the worse because the same experts are saying such heat waves are eminently survivable with adequate resources to protect people," said climate researcher Eric Wolff, lead author of the Royal Society update.

Read more at Climate Change Is Happening Faster than Expected, and It’s More Extreme

Trump’s Offshore Oil Rush a Disaster for Oceans and Climate - by Richard Steiner

Nowhere is President Trump’s historic assault on our natural environment more worrisome than his reckless push for increased offshore oil and gas drilling.

At a time when science says that two-thirds of all fossil fuel reserves must stay in the ground to stabilize the global climate, Trump is instead pushing a huge increase in fossil fuel production, onshore and offshore. Expanded drilling, weakened agency oversight, relaxed safety regulations, and oil companies with more cash on hand from tax cuts together make a “perfect storm” for increased risk of climate disasters and oil spills.

If this dangerous offshore plan moves ahead, we can expect decades of more catastrophic oil spills, hurricanes, floods, droughts, and wildfires.

In addition to the oil and gas already in production offshore, the U.S. offshore seabed may hold another 90 billion barrels of oil and 400 trillion cubic feet of natural gas, or more. Burning this amount of fossil fuel would add over 50 billion tons of CO2 to the global atmosphere, a “carbon bomb” comparable to the Alberta tar sands. And much of this CO2 would be absorbed into seawater, increasing ocean acidification.
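The "over 50 billion tons" figure can be sanity-checked with a back-of-envelope calculation. This sketch assumes approximate emission factors of about 0.43 metric tons of CO2 per barrel of crude oil and about 0.055 metric tons per thousand cubic feet (Mcf) of natural gas, round values of the kind used in U.S. EPA greenhouse gas equivalency calculations. These factors are our assumption, not the author's.

```python
# Back-of-envelope CO2 estimate for the offshore reserves cited above.
# Emission factors are approximate assumptions, not figures from the article.

OIL_T_CO2_PER_BARREL = 0.43   # metric tons CO2 per barrel of crude oil (approx.)
GAS_T_CO2_PER_MCF = 0.055     # metric tons CO2 per thousand cubic feet of gas (approx.)


def offshore_co2_tons(oil_barrels: float, gas_cubic_feet: float) -> dict:
    """Return estimated CO2 (metric tons) from burning the given oil and gas."""
    oil_co2 = oil_barrels * OIL_T_CO2_PER_BARREL
    gas_co2 = (gas_cubic_feet / 1_000) * GAS_T_CO2_PER_MCF  # convert ft^3 to Mcf
    return {"oil": oil_co2, "gas": gas_co2, "total": oil_co2 + gas_co2}


est = offshore_co2_tons(oil_barrels=90e9, gas_cubic_feet=400e12)
for source, tons in est.items():
    print(f"{source:>5}: {tons / 1e9:5.1f} billion metric tons CO2")
```

Under these assumptions the oil contributes roughly 39 billion metric tons and the gas roughly 22 billion, about 61 billion in total, which is consistent with the article's "over 50 billion tons."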

The administration’s offshore drilling plan would commit us to another several decades of carbon-intensive energy production, delay our transition to sustainable low-carbon energy, and guarantee future climate chaos. But Trump and friends see billions of dollars lying in the seabed, and ignore the inconvenient climate truth. This could be “game over” for efforts to control climate change.

Read more at Trump’s Offshore Oil Rush a Disaster for Oceans and Climate

The World Should Go for Zero Emissions, Not Two Degrees - by Oliver Geden

Compared to temperature thresholds, targeting greenhouse gas neutrality is noticeably more precise, easier to evaluate, politically more likely to be attained, and ultimately more motivating too

Since the Intergovernmental Panel on Climate Change (IPCC) declines, with good reason, to deliver a scientific formula for fairly distributing mitigation obligations among individual states, every government is able to declare confidently that its national pledges are in line with global temperature targets. As it stands, mitigation efforts can only be critically evaluated at the global level. As a result, no single country can be held responsible for the looming breach of the 2 °C or 1.5 °C target.

Steering action

Compared to temperature thresholds, targeting greenhouse gas neutrality is noticeably more precise, easier to evaluate, politically more likely to be attained, and ultimately more motivating too. Since this goal directly targets the activities perceived as problematic, its effectiveness at steering policy can be expected to be much greater than that of “1.5 °C” or “well below 2 °C”.

A zero emissions target shows policymakers, the media and the public fairly precisely what needs to be done. If global greenhouse gas neutrality in the sense of Article 4 of the Paris Agreement is interpreted to mean that all signatories have to gradually reach “net zero” between 2050 and 2100, then they must all be measured against the same yardstick. Any differentiation between these obligations – for instance, between industrialized nations, emerging economies and developing countries – can only occur along the time axis. Under the “bottom-up” approach of the Paris Agreement, governments make that decision for themselves.

Each country’s emissions must first peak (which is already the case for 49 of them), then continually decrease and finally attain zero. Measured against this target, it is easy to make mitigation action transparent – not just of national governments, but of cities, economic sectors, and individual companies as well.

Whoever ignores the target will not be able to deceive others: it is relatively easy to ascertain whether the respective emissions are going up or down. Wherever greenhouse gas neutrality becomes the socially accepted norm, new fossil-fuel infrastructure would be very hard to justify.
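Geden's point that it is easy to tell whether emissions are going up or down can be illustrated with a simple trend check. This sketch fits an ordinary least-squares slope to the most recent years of an emissions series and, if the slope is negative, extrapolates linearly to the year emissions would reach zero. The emissions series, the five-year window, and the linear extrapolation are all hypothetical simplifications for illustration, not a method described in the article.

```python
from typing import Optional, Sequence


def trend_slope(years: Sequence[int], values: Sequence[float]) -> float:
    """Ordinary least-squares slope of values against years."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    var = sum((x - mean_x) ** 2 for x in years)
    return cov / var


def net_zero_year(years: Sequence[int], values: Sequence[float],
                  window: int = 5) -> Optional[float]:
    """Extrapolate the recent linear trend to the year emissions reach zero.

    Returns None if emissions over the window are flat or rising.
    """
    ys, vs = years[-window:], values[-window:]
    slope = trend_slope(ys, vs)
    if slope >= 0:
        return None  # not yet declining: no net-zero date on the current trend
    # Solve last_value + slope * (t - last_year) = 0 for t.
    return ys[-1] - vs[-1] / slope


# Hypothetical emissions series (Mt CO2e) that peaks and then falls.
years = list(range(2010, 2018))
emissions = [500, 520, 530, 525, 510, 495, 480, 465]
slope = trend_slope(years[-5:], emissions[-5:])
print(f"recent trend: {slope:+.1f} Mt/yr")
print(f"net zero on current trend: {net_zero_year(years, emissions):.0f}")
```

For this made-up series the recent trend is a decline of 15 Mt per year, which would reach zero around 2048. A real assessment would need actual inventory data and a less naive projection than a straight line.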

A zero emissions vision could also kickstart a race to reach the zero line before others. Some countries have already taken up the challenge. Sweden, for instance, hopes to reach zero by 2045. The United Kingdom has at least declared its willingness to announce its zero emissions target soon.

Obviously, even a zero emissions target is no guarantee that all emissions reduction measures will be implemented as planned; over a time horizon of several decades, no such guarantee can exist. Since greenhouse gas neutrality is primarily about setting a clear direction, rather than positing an imaginary border between “acceptable” and “dangerous” climate change (namely 2 °C), its attainability is not a question of either/or, but of sooner/later. It thus avoids definitive failure, which would have a demoralizing political effect.

But targeting zero emissions would provide clear and transparent directions for all relevant actors. It would bring out inconsistencies between talk, decisions and actions much more clearly than temperature objectives such as 2 °C or 1.5 °C can.

The UNFCCC should therefore give the target of greenhouse gas neutrality much more weight in future. It could start with the facilitative dialogue planned for 2018, whose rules are being set at the COP23 in Bonn. The dialogue is intended to boost countries’ ambitions and to lead to strengthened ‘nationally determined contributions’ under the Paris Agreement.

Read more at The World Should Go for Zero Emissions, Not Two Degrees

The Netherlands Confronts a Carbon Dilemma: Sequester or Recycle?

Public opposition to sequestration will make it harder to reach the country’s carbon reduction goal.

As soon as the new Dutch government took office in October, it announced an aggressive target—to reduce carbon emissions by 49 percent by 2030. This will ultimately require the Netherlands to sequester 20 million metric tons of carbon dioxide per year—equivalent to the annual emissions produced by 4.5 coal-fired power plants.

Sequestering that much CO2 underground will be difficult, whether it’s captured directly from the flues of power stations and steel mills or extracted from the air. Currently, the Netherlands sequesters less than 10,000 metric tons of CO2 annually.
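The size of the challenge is easier to see as a ratio. This sketch uses only the article's own figures (20 million metric tons per year required, under 10,000 metric tons sequestered today, and the comparison to 4.5 coal-fired plants) to compute the implied scale-up and the per-plant emissions that comparison assumes.

```python
TARGET_T_PER_YEAR = 20_000_000   # metric tons CO2/yr the 2030 goal requires
CURRENT_T_PER_YEAR = 10_000      # article: "less than 10,000" today (an upper bound)
COAL_PLANT_EQUIVALENT = 4.5      # article's coal-plant comparison

# Because today's figure is an upper bound, the scale-up is a lower bound.
scale_up = TARGET_T_PER_YEAR / CURRENT_T_PER_YEAR
per_plant = TARGET_T_PER_YEAR / COAL_PLANT_EQUIVALENT

print(f"required scale-up: at least {scale_up:,.0f}x")
print(f"implied emissions per coal plant: {per_plant / 1e6:.1f} Mt CO2/yr")
```

On these numbers the Netherlands would need to sequester at least 2,000 times more CO2 each year than it does now, and the comparison implies roughly 4.4 million metric tons of CO2 per coal plant per year.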

Gert Jan Kramer, a physicist at Utrecht University, says the government’s aims are “drastic” but possible. “The technology and the industrial capacity for storing underground tens of megatons [1 megaton = 1 million metric tons] of carbon dioxide is ready,” he says.

Underground natural gas reservoirs are already leakproof, and pumping CO2 into them while extracting gas would maintain their internal pressure, which would stabilize underground rock structure and prevent seismic activity. “We have investigated every event and consequence imaginable, and we’ve concluded that underground carbon storage is safe,” says Robert Hack, an engineering geologist at the University of Twente, in the Netherlands.

However, carbon sequestration projects have not fared well in Europe because of public opposition. More than 20 large-scale carbon capture and sequestration projects are now operational worldwide, but only two are based in Europe.

...

However, other scientists disagree with the idea that recycling CO2 to produce synthetic fuels can meaningfully reduce the amount of carbon in the atmosphere. One problem is that the same process that scrubs CO2 from the flue gases of a fossil-fuel power plant also reduces the plant’s electrical power output by up to 25 percent, says Hack. This is because that process requires a substantial amount of energy to heat, cool, and pump solvents that absorb CO2 from the flue gases.
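Hack's "up to 25 percent" is what engineers call an energy penalty. This sketch shows what that penalty means for a plant's net output and how much extra fuel would be needed to deliver the same electricity as a plant without capture. The 1,000 MW plant size is a hypothetical round number; the 25 percent is the upper bound quoted in the article.

```python
def net_output_mw(gross_mw: float, penalty: float) -> float:
    """Net electrical output after the capture system's parasitic load."""
    return gross_mw * (1 - penalty)


def extra_fuel_fraction(penalty: float) -> float:
    """Extra fuel needed to deliver the same net output as an uncaptured plant."""
    return 1 / (1 - penalty) - 1


gross = 1_000.0   # hypothetical plant size, MW
penalty = 0.25    # upper bound cited by Hack
print(f"net output: {net_output_mw(gross, penalty):.0f} MW")
print(f"extra fuel for same net output: {extra_fuel_fraction(penalty):.0%}")
```

A 25 percent loss in output translates into burning about a third more fuel for the same net electricity, which is one reason critics doubt the climate benefit of capturing CO2 from fossil-fuel plants.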

Gunnar Luderer, a researcher at the Potsdam Institute for Climate Impact Research, in Germany, argues that synthetic fuel produced from CO2 for transportation is not really carbon neutral when captured from the flues of power plants. “You cannot have a second carbon capture from the emissions of a car or an airplane. In the end, it is fossil carbon that undergoes combustion twice,” he says.

Luderer agrees, however, that capturing carbon from the air and using it for purposes other than transportation changes the equation. Cement factories are known for their massive release of carbon into the atmosphere. Instead of capturing that carbon after the fact, it would make more sense to extract carbon from the air and use it to produce carbon fibers. These fibers are less prone to corrosion than steel beams and need less concrete cover. Using them in place of steel could reduce demand for concrete, and thereby cut emissions from its production. “Here, you would have a double benefit,” says Luderer.

Read more at The Netherlands Confronts a Carbon Dilemma: Sequester or Recycle?

China Is Building a Futuristic 'Forest City' with More Trees than People

The plan calls for terraced buildings with almost a million plants.

The world’s first “forest city” is under construction in China. The plan calls for terraced buildings with almost a million plants and 40,000 trees … growing not just on the ground, but on balconies and rooftops. In the architectural designs, the buildings are so covered with greenery that they appear to blend into the mountainous background.

Called Liuzhou Forest City, the green building project is intended to help provide homes for China’s rapidly growing population without creating more carbon pollution.

The new city is expected to house 30,000 people, so the design includes a train station, hospital, schools, and commercial areas. Solar and geothermal energy will be key sources of power.

The concept and design come from the firm of Italian architect and urban planner Stefano Boeri. He says trees absorb carbon pollution and provide shade that reduces the need for energy-guzzling air conditioners, so he argues that planting urban forests is critical to slowing global warming.

Boeri: “It’s one of the main ways to deal with climate change.”

The futuristic city will set an example for how cities can grow and reduce carbon pollution.

Read more at China Is Building a Futuristic 'Forest City' with More Trees than People

Timeline for Electric Vehicle Revolution (via Lower Battery Prices, Supercharging, Lower Battery Prices)

Everyone knows that electric vehicles (EVs) are going to replace internal combustion engine vehicles (ICEVs) in the long run. Many of us are excited about this key transition away from fossil fuels and hope that it comes sooner rather than later, yet are not sure exactly when the big breakthroughs in market share are going to happen. It is often stated that at battery prices of $100/kWh, EVs will successfully compete with ICEVs, but mainstream predictions about how and when this happens vary widely. In this in-depth article we are going to look in more detail at the figures relevant to different vehicle segments, estimate the most probable timeline for feature and price parity in these segments, and offer a counterpoint to the more conservative timelines that we see from incumbents (OPEC) and progressives (BNEF) alike. We know the EV disruption is real, since it is already well underway in the premium sedan segment.
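The $100/kWh threshold matters because the battery pack is one of the most expensive parts of an EV, and its cost scales with capacity. This sketch multiplies a per-kWh price by pack size; the 60 kWh pack and the $200/kWh comparison price are hypothetical round numbers chosen for illustration, not figures from the article.

```python
def pack_cost_usd(price_per_kwh: float, capacity_kwh: float) -> float:
    """Battery pack cost = price per kWh x pack capacity."""
    return price_per_kwh * capacity_kwh


CAPACITY_KWH = 60  # hypothetical mid-size EV pack
for price in (200, 100):  # $/kWh; 100 is the parity threshold cited above
    print(f"${price}/kWh -> ${pack_cost_usd(price, CAPACITY_KWH):,.0f} pack")
```

For this hypothetical pack, halving the per-kWh price from $200 to $100 cuts the battery cost from $12,000 to $6,000, a saving that goes straight into the vehicle's sticker price.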

The bottom line is this: EVs will be better and cheaper than ICEVs at the value end of the biggest volume segments (small SUV and compact car) by 2022. At the higher-priced end of these segments, EVs will already be at parity by 2019. By 2024–2025, EVs will outcompete on both features and price in pretty much every vehicle segment (except rocket ships).

Read more at Timeline for Electric Vehicle Revolution (via Lower Battery Prices, Supercharging, Lower Battery Prices)

Monday, December 25, 2017

Sunday, December 24, 2017

Saturday, December 23, 2017

California Wildfire Becomes Largest on Record in the State

A sprawling Southern California wildfire that has been burning through rugged, drought-parched coastal terrain since Dec. 4 has become the largest on record in the state, state fire officials said on Friday.

The so-called Thomas fire has blazed through 273,400 acres (110,641 hectares), surpassing the previous record of the 2003 Cedar fire in San Diego County that scorched 273,246 acres and killed 15 people, they said.
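The hectare figure follows from the exact conversion of 1 international acre = 0.40468564224 hectares, and the same arithmetic shows how narrow the Thomas fire's margin over the Cedar fire is. Only the acreages come from the article.

```python
ACRE_TO_HECTARE = 0.40468564224  # exact: 1 international acre = 4046.8564224 m^2

thomas_acres = 273_400
cedar_acres = 273_246
margin_acres = thomas_acres - cedar_acres

print(f"Thomas fire: {thomas_acres * ACRE_TO_HECTARE:,.0f} ha")
print(f"margin over Cedar fire: {margin_acres} acres "
      f"({margin_acres * ACRE_TO_HECTARE:.0f} ha)")
```

The Thomas fire converts to about 110,641 hectares, and it surpassed the 2003 Cedar fire by only 154 acres (about 62 hectares), a margin that, per the article, came largely from controlled burning.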

The Thomas fire was 65 percent contained as of Friday evening, and fire crews had virtually halted the blaze's natural spread days earlier, they said.

Incremental increases in burned acreage detected by daily aerial surveys since then have been largely due to controlled-burning operations conducted by firefighters to clear swaths of vegetation between the smoldering edges of the fire zone and populated areas.

The fire has destroyed over 1,000 structures as it has scorched coastal mountains, foothills, and canyons across Ventura and Santa Barbara counties northwest of Los Angeles, officials said.

Read more at California Wildfire Becomes Largest on Record in the State

Superfund Sites Are in Danger of Flooding, Putting Millions of Americans at Risk

An investigation by the Associated Press found that 327 of the most polluted sites in the country are vulnerable to flooding and sea-level rise spurred by climate change.

The 2 million people who live within a mile of these sites face a serious health threat if floodwaters carry hazardous materials into their homes or contaminate drinking water.

We already saw this happen in Houston when Hurricane Harvey’s torrential rains hit 13 Superfund sites. The San Jacinto River Waste Pits Superfund site, for example, leaked chemicals that can cause cancer and birth defects. The EPA estimates it will cost $115 million to clean up the site.

Low-income communities and people of color likely face the most risk from Superfund sites. “We place the things that are most dangerous in sacrifice zones, which in many instances are communities of color where we haven’t placed as much value on their lives,” said Mustafa Ali, who led the EPA’s environmental justice program before resigning this year.

Read more at Superfund Sites Are in Danger of Flooding, Putting Millions of Americans at Risk

Friday, December 22, 2017

Will Canada’s Latest Boom in Tar Sands Oil Mean Another Boom for Oil-by-Rail?

Nothing seems able to derail the rise in Canadian tar sands oil production. Low prices, canceled pipelines, climate realities, a major oil company announcing it will no longer develop heavy oils, divestment, and now even refusals to insure tar sands pipelines have all certainly slowed production, but it is still poised for significant growth over the next several years.

In March an analyst for GMP FirstEnergy commented, “It's hard to imagine a scenario where oilsands production would go down.”

But with pipelines from Canada to U.S. refineries and ports running at or near capacity, it's hard to imagine all that heavy Canadian oil going anywhere without the help of the rail industry.

“The oilsands are witnessing unprecedented growth that we now peg at roughly 250,000 barrels per day in 2017 and 315,000 bpd in 2018, before downshifting to roughly 180,000 bpd in 2019,” says a new report from analyst Greg Pardy of RBC Dominion Securities.

However, much like shale oil in the U.S., increased production doesn’t necessarily mean increased profits. The Wall Street Journal recently reported that since 2007 investors have spent approximately a quarter trillion dollars more on shale production than those investments have generated.

Clearly the oil industry will keep pumping as much oil as it can, even while losing large sums of money. And just as in the U.S., Canadian oil producers face significant economic challenges, which have driven some companies out of the business.

As a result of these challenges, forecasts for future tar sands production have fallen significantly. In 2013 the forecast for 2030 production was 6.7 million barrels per day. That has been revised down to 5.1 million barrels per day. While that is a sizable drop in expected future production, the industry will still be growing in the near future, and most of that oil will be exported to America.
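The numbers in this story can be put side by side with a few lines of arithmetic. This sketch computes the relative size of the forecast revision and sums RBC's growth estimates, reading them as year-over-year additions to daily production; that reading is our interpretation of the quote, not something the report is stated to say.

```python
forecast_2013 = 6.7  # million bpd expected for 2030, per the 2013 forecast
forecast_now = 5.1   # revised forecast for 2030, million bpd
cut = (forecast_2013 - forecast_now) / forecast_2013
print(f"forecast cut: {cut:.0%}")

# RBC estimates, read here as annual increases in production (assumption).
growth_bpd = {2017: 250_000, 2018: 315_000, 2019: 180_000}
print(f"added production 2017-2019: {sum(growth_bpd.values()):,} bpd")
```

The revision trims the 2030 outlook by about 24 percent, yet on this reading the near-term growth still adds roughly 745,000 barrels per day in three years, which is the volume the pipeline-versus-rail question is about.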

One question currently up for debate is just how that oil will get to American refineries and ports.

Read more at Will Canada’s Latest Boom in Tar Sands Oil Mean Another Boom for Oil-by-Rail?
