News related to climate change, aggregated daily by David Landskov. A link to the original article is at the bottom of each post.

Tuesday, June 30, 2015

Water Use Declining as Natural Gas Grows

As the U.S. has undergone a rapid and massive shift to natural gas from coal, one benefit has gone almost entirely overlooked: the amount of water needed to cool the nation’s power plants has dropped substantially.

The widespread adoption of hydraulic fracturing (fracking) technology has led to dramatically higher natural gas production in the U.S. since 2005. The resulting drop in natural gas prices, coinciding with new EPA air quality regulations for coal-fired power plants, has led to a surge in natural gas-fired electricity generation nationwide.

Between 2005 and 2012, coal’s share of electricity generation fell to 37 percent from 50 percent. Natural gas rose to 30 percent from 19 percent. Total electricity generation stayed roughly constant.

That shift has translated into big changes in the amount of water withdrawn from lakes and rivers to cool power plants. The change matters because, nationally, 38 percent of all water withdrawn goes to power plants.

During the most recent 7-year period with reliable data, water withdrawals for power plants fell dramatically, from 52 trillion gallons in 2005 to 33 trillion gallons in 2012. On average, today's natural gas power plants use about one-quarter as much water per megawatt-hour generated as their coal-fired counterparts.
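For readers who want to check the scale of the shift, the percentages implied by the reported figures can be worked out directly. This is a minimal Python sketch using only the national numbers quoted above (2005 and 2012 withdrawals, and coal and natural gas shares of generation); it treats them as exact, which the underlying data are not.

```python
# Figures as reported in the article, treated as exact for illustration.
withdrawals_tgal = {2005: 52.0, 2012: 33.0}  # trillion gallons/year withdrawn to cool power plants
coal_share = {2005: 50.0, 2012: 37.0}        # percent of U.S. electricity generation
gas_share = {2005: 19.0, 2012: 30.0}         # percent of U.S. electricity generation


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (negative means a decline)."""
    return (new - old) / old * 100.0


withdrawal_change = percent_change(withdrawals_tgal[2005], withdrawals_tgal[2012])
coal_points = coal_share[2012] - coal_share[2005]  # change in percentage points
gas_points = gas_share[2012] - gas_share[2005]     # change in percentage points

print(f"Cooling-water withdrawals: {withdrawal_change:.1f}%")
print(f"Coal share: {coal_points:+.0f} points; natural gas share: {gas_points:+.0f} points")
```

With these inputs the withdrawal figure works out to a decline of about 36.5 percent, alongside a 13-point drop in coal's share of generation and an 11-point rise in natural gas's share.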

Water withdrawals for power generation dropped by more than 1.5 trillion gallons per year in Ohio, New York, and Illinois; 10 states had decreases of 1 trillion gallons or more. Electricity generated from natural gas increased 370 percent on average in those 10 states, with the largest absolute increases coming in Alabama and New York.

Some power plants are designed to be cooled by water withdrawn from a river, lake, or ocean and then returned to those waters. To protect fish and other wildlife from dramatic increases in water temperature caused by the discharges, regulations limit how much the water can be warmed at the power plant before it is discharged. During hot or dry periods, some power plants have been forced to reduce output, shut down completely, or seek exemptions from the regulations.

Water consumption in power generation refers to water withdrawn from a river, lake, or ocean but not returned to the water body from which it came; evaporation from a cooling tower is one example. Nationally, about 1 trillion gallons of freshwater was consumed to cool power plants in 2012, compared with 33 trillion gallons of freshwater withdrawals. Power plants without cooling towers account for most of the withdrawals. These plants use “once-through” cooling systems, in which water withdrawn from a river, lake, or the sea absorbs heat as it cools the plant and is discharged back to its source before its temperature exceeds the regulated limit. Most new natural gas power plants use cooling towers, while many recently retired coal plants used once-through cooling, which helps explain the national drop in water withdrawals for power generation.

Read more at Water Use Declining as Natural Gas Grows

Unprecedented June Heat in Northwest U.S. Caused by Extreme Jet Stream Pattern

A searing heat wave unprecedented for June scorched the Northwest U.S. and Western Canada on Saturday and Sunday. Temperatures soared to their highest June levels in recorded history for portions of Washington, Idaho, Montana, and British Columbia; both Idaho and Washington set all-time high temperature records for the month of June on Sunday. According to wunderground's weather historian, Christopher C. Burt, the 113°F measured in Walla Walla, Washington, beat that state's previous June record of 112°F, set at John Day Dam on June 18, 1961. In addition, the 111°F reading at Lewiston, Idaho, was that state's hottest June temperature on record. An automated station at Pittsburg Landing, Idaho, hit 116°F, but that reading will have to be verified before it can be considered official. A slew of major stations set all-time June heat records on both Saturday and Sunday in Washington, Idaho, and Montana, and at least two tied their hottest temperature for any day in recorded history. A destructive wildfire hit Wenatchee, Washington, overnight, destroying twelve buildings. Wenatchee set a new June record high of 109°F on Sunday, just one degree shy of its all-time record of 110°F, set on July 17-18, 1941. Jon Erdman of The Weather Channel (TWC) has full details of all the records set. Sunday will end up being the hottest day of the heat wave for most locations in the Northwest U.S. and Western Canada, but temperatures will still be 10 to 15°F above average for most of the remainder of the week.

What caused the heat wave?

The planet as a whole has experienced its warmest January-through-May period on record this year, and it is much easier to set all-time heat records when the baseline temperature is at record warm levels. But all-time records also require unusual meteorology, and this week's heat wave was caused by an extreme jet stream configuration featuring a very sharp ridge of high pressure over Western North America and a compensating deep trough of low pressure over the Midwest United States. The ridge of high pressure allowed hot air from the Southwest U.S. to push northward and brought sunny skies that allowed plenty of solar heating of the ground.

An extreme jet stream configuration was also in evidence over Western Europe, where a strong ridge of high pressure on Sunday brought the warmest June temperatures ever recorded to the Spanish cities of Madrid and Toledo. This sort of extreme jet stream pattern has grown increasingly common in recent decades, as I wrote about for the December 2014 issue of Scientific American (behind a paywall for $6). A study published last week by researchers at Stanford University found that unusually intense and long-lived high pressure systems of the kind responsible for heat waves have increased over some parts of the globe since the advent of good satellite data in 1979. In particular, they found that in summertime, these patterns had increased over Europe, western Asia, and eastern North America. As yet, scientists have not come to a consensus on what might be causing the jet stream to behave in such an extreme fashion, though one leading theory is that rapid warming of the Arctic, which has led to record losses of sea ice and spring snow cover, might be responsible.

Read more at Unprecedented June Heat in Northwest U.S. Caused by Extreme Jet Stream Pattern

China Just Made Its Plans to Fight Climate Change Official

On Tuesday, China released long-awaited final greenhouse gas targets as part of its submission to the United Nations climate talks in Paris later this year.

Li Keqiang, China’s prime minister, said in a statement the country “will work hard” to peak its CO2 emissions before 2030, which was its previous commitment as part of the United States-China joint pledge from November 2014, the first time China had agreed to mitigate emissions.

The statement also said that China will cut its carbon intensity, or greenhouse gas emissions per unit of GDP, by 60-65 percent from 2005 levels by 2030, a large increase from its 40-45 percent goal for 2020.

...

“China has already achieved a 33 percent reduction in the carbon intensity of its booming economy since 2005, and last month the government ordered its manufacturers to cut current levels by a further 40 percent by 2025,” writes Stian Reklev at Carbon Pulse.
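Because the 2030 carbon-intensity target and the 33 percent already achieved are both measured against 2005, the remaining reduction is easier to judge when expressed relative to the current level. The following is a minimal Python sketch of that arithmetic; it assumes the 33 percent figure describes today's intensity and treats all figures as exact.

```python
# Carbon intensity (emissions per unit of GDP), normalized so that 2005 = 1.0.
# Figures from the article: about 33% already cut; 2030 target is a 60-65% cut.
already_cut = 0.33
target_cuts = (0.60, 0.65)

# Assumption: the 33% reduction describes the current intensity level.
current_level = 1.0 - already_cut  # about 0.67 of the 2005 intensity


def further_cut_needed(target_cut: float) -> float:
    """Fractional reduction still required from the current intensity level."""
    target_level = 1.0 - target_cut
    return 1.0 - target_level / current_level


for cut in target_cuts:
    print(f"{cut:.0%} below 2005 -> {further_cut_needed(cut):.0%} below the current level")
```

With these inputs the sketch gives roughly a 40 to 48 percent further cut from the current level. That is a different quantity from the manufacturers' 40-percent-by-2025 order mentioned in the quote, which the sketch does not model.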

The statement also reaffirms China’s goal of increasing the share of non-fossil fuels in its primary energy consumption to about 20 percent by 2030.

...

While these are not bold new targets, they are of critical importance to the international negotiations at the climate talks at the end of the year, where leaders hope to establish a post-2020 agreement that applies to all nations. China is the world’s second largest economy and biggest greenhouse gas emitter, and no deal would be achievable without its cooperation.

With China officially submitting its Intended Nationally Determined Contribution (INDC) to the UNFCCC, the world’s three largest carbon polluters, China, the United States, and the European Union, have all made commitments ahead of the Paris Summit. The United States plans to reduce emissions to 26-28 percent below 2005 levels by 2025 and to make its best efforts to reduce them by 28 percent. EU leaders have agreed to a 2030 greenhouse gas reduction target of at least 40 percent compared to 1990.

Read more at China Just Made Its Plans to Fight Climate Change Official

More than 8,400 Arrested in China for Environmental Crimes in 2014

Chinese police arrested thousands of people suspected of environmental crimes last year, a minister told parliament Monday, while at the same time vowing to get serious about protecting the environment.

Environment Minister Chen Jining told a bi-monthly session of the National People's Congress' standing committee that the number of criminal cases handed over to the police by environmental protection departments in 2014 reached 2,080, twice the total number during the previous decade. More than 8,400 people were arrested, according to a transcript of Chen's address published on the parliament's website.

Facing mounting public pressure, leaders in Beijing have declared a war on pollution, saying they will abandon a decades-old growth-at-all-costs economic model that has spoiled much of China's water, skies and soil.

But forcing growth-obsessed local governments and powerful state-owned enterprises to comply with the new laws and standards has become one of the Chinese government’s biggest challenges.

“When Deng Xiaoping came to power, he decentralized power to the local government to stimulate economic growth. In essence he created a federal system with no checks,” said Jennifer Turner, director of the China Environment Forum at the Wilson Center, referring to the reformist Chinese leader who took power in the 1970s. “Now the Chinese government has been playing catch up. They’ve been trying to create environmental regulations and laws and campaigns to check pollution. In the 1990s, this was a halfhearted check, because the Communist Party and government needed the economy to develop … Now that the pollution is a true threat to economic development, they’ve got to deal with it.”

According to a 2013 report from the World Bank, environmental degradation and resource depletion cost China about 9 percent of its gross national income.

Read more at More than 8,400 Arrested in China for Environmental Crimes in 2014

Monday, June 29, 2015

The Supreme Court Will Allow Coal Plants to Emit Unlimited Mercury

Power plants will continue to be able to emit unlimited mercury, arsenic, and other pollutants thanks to the Supreme Court, which on Monday invalidated the first-ever U.S. regulations to limit toxic heavy metal pollution from coal and oil-fired plants.

In a 5-4 ruling, the Supreme Court found fault with the Environmental Protection Agency’s Mercury and Air Toxics Standards, commonly referred to as MATS.

The EPA had been trying to implement a rule that cut down on toxic mercury pollution for more than two decades. But the Supreme Court majority opinion, written by Justice Antonin Scalia, said the EPA acted unlawfully when it failed to consider how much the regulation would cost the power industry before deciding to craft the rule.

“EPA must consider cost — including cost of compliance — before deciding whether regulation is appropriate and necessary,” the opinion reads. “It will be up to the [EPA] to decide (as always, within the limits of reasonable interpretation) how to account for cost.”

The decision doesn’t mean power plants will never be subject to regulations on toxic air pollutants. Instead, it means the EPA will have to go back to the drawing board, and find some mechanism to consider how much it will cost the power industry. Until another version of MATS is approved — a process that often takes years — power plants will have no limits on their emissions of mercury, arsenic, chromium, and other toxins. In other words, the rule is effectively delayed.

Delaying the EPA’s proposed limits on mercury pollution from power plants has been a priority among some Congressional Republicans since at least 2011. Back then, Rep. Ed Whitfield (R-KY) — who is also trying to delay the EPA’s regulation on carbon emissions — said he didn’t think the mercury rule could legally be repealed, but that “if we can delay the final rule … then I think we’ve accomplished a lot.”

Coal- and oil-fired power plants are the largest industrial sources of toxic air pollution in the country. Power plants are responsible for 50 percent of all U.S. emissions of mercury, a neurotoxin particularly dangerous to unborn children. If the rule had been allowed to remain in place, the EPA estimated that 11,000 premature deaths would be prevented every year; that IQ loss to children exposed to mercury in the womb would be reduced; and that there would be annual monetized benefits of between $37 billion and $90 billion.

However, Monday’s decision centered not on health benefits but on cost to industry. The lawsuit, brought by Michigan and 19 other Republican-led states, argued that the EPA didn’t consider how much the regulations would cost the power industry before it decided to craft them. Indeed, the EPA did not consider cost when it initially decided to regulate heavy metal emissions. In the first stages of regulation development, the EPA usually considers only whether a pollutant poses a threat to human health and the environment.

The EPA does, however, consider cost in the later stages of Clean Air Act regulation. Specifically, it considers cost when deciding what the specific limits on a pollutant should be. In the case of MATS, the EPA justified the estimated $9.6 billion yearly price tag for the proposed rule by also estimating a $37 billion to $90 billion yearly benefit.
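The EPA's justification rests on comparing the two yearly estimates. This minimal Python sketch computes the benefit-to-cost ratio and net annual benefit implied by the figures reported above; it is illustrative arithmetic only, not the EPA's own methodology, and the function name is hypothetical.

```python
# EPA estimates for MATS as reported in the article (billions of dollars per year).
annual_cost = 9.6
annual_benefits = (37.0, 90.0)  # low and high benefit estimates


def benefit_cost_summary(cost: float, benefit: float) -> tuple[float, float]:
    """Return (benefit-to-cost ratio, net annual benefit) for one benefit estimate."""
    return benefit / cost, benefit - cost


for benefit in annual_benefits:
    ratio, net = benefit_cost_summary(annual_cost, benefit)
    print(f"benefits ${benefit:.0f}B: {ratio:.1f}x the cost, net ${net:.1f}B per year")
```

With the reported estimates, the benefits come to roughly 3.9 to 9.4 times the cost, or about $27 billion to $80 billion a year in net benefits.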

...

While environmentalists may be disappointed with the ruling, Bloomberg Business pointed out on Wednesday that there could be a silver lining. Pro-coal states have been using MATS as a key argument against the EPA’s proposed regulations on carbon emissions from power plants, regulations seen as important to fighting climate change. According to that argument, MATS precludes the carbon rule, since MATS already regulates pollutants from power plants.

If MATS is invalidated, the article asserts, the preclusion argument might go out the window with it. The EPA would then essentially be free to regulate carbon dioxide, and also free to regulate mercury at a later date.

Read more at The Supreme Court Will Allow Coal Plants to Emit Unlimited Mercury

Extreme Makeover: Humankind's Unprecedented Transformation of Earth

Human beings are pushing the planet in an entirely new direction with revolutionary implications for its life, a new study by researchers at the University of Leicester has suggested.

The research team, led by Professor Mark Williams of the University of Leicester's Department of Geology, has published its findings in a new paper entitled 'The Anthropocene Biosphere' in The Anthropocene Review.

Professor Jan Zalasiewicz, also of the University of Leicester's Department of Geology, who was involved in the study, explained the research: "We are used to seeing headlines daily about environmental crises: global warming, ocean acidification, pollution of all kinds, looming extinctions. These changes are advancing so rapidly that the concept that we are living in a new geological period of time, the Anthropocene Epoch (proposed by the Nobel Prize-winning atmospheric chemist Paul Crutzen), is now in wide currency, with new and distinctive rock strata being formed that will persist far into the future.

"But what is really new about this chapter in Earth history, the one we're living through? Episodes of global warming, ocean acidification and mass extinction have all happened before, well before humans arrived on the planet. We wanted to see if there was something different about what is happening now."

The team examined what makes the Anthropocene special and different from previous crises in Earth's history. They identified four key changes:

- The homogenization of species around the world through mass, human-instigated species invasions; nothing on this global scale has happened before

- One species, Homo sapiens, is now in effect the top predator on land and in the sea, and has commandeered for its use over a quarter of global biological productivity. There has never been a single species of such reach and power previously

- Homo sapiens is increasingly directing the evolution of other species

- There is growing interaction of the biosphere with the 'technosphere' (the sum total of all human-made machines and objects, and the systems that control them), a concept pioneered by team member Professor Peter Haff of Duke University

Read more at Extreme Makeover: Humankind's Unprecedented Transformation of Earth

Retreating Sea Ice Linked to Changes in Ocean Circulation, Could Affect European Climate

Retreating sea ice in the Iceland and Greenland Seas may be changing the circulation of warm and cold water in the Atlantic Ocean, and could ultimately impact the climate in Europe, says a new study by an atmospheric physicist from the University of Toronto Mississauga (UTM) and his colleagues in Great Britain, Norway and the United States.

"A warm western Europe requires a cold North Atlantic Ocean, and the warming that the North Atlantic is now experiencing has the potential to result in a cooling over western Europe," says Professor G.W.K. Moore of UTM's Department of Chemical & Physical Sciences.

As global warming affects the Earth and its oceans, the retreat of the sea ice means there will be less of the cold, dense water, generated through a process known as oceanic convection, that flows south and feeds the Gulf Stream. If convection decreases, says Moore, the Gulf Stream may weaken, reducing its warming of the atmosphere compared with today.

Their research, published in Nature Climate Change on June 29, is the first attempt to examine and document these changes in the air-sea heat exchange in the region -- brought about by global warming -- and to consider its possible impact on oceanic circulation, including the climatologically important Atlantic Meridional Overturning Circulation.

Previous studies have focused instead on the changing salinity of the northern seas and its effects on ocean circulation.

...

The Iceland and Greenland Seas are among the few places worldwide where conditions allow this heat exchange to change the ocean's density enough to cause surface waters to sink. The largest air-sea heat exchange in these seas occurs at the edge of the sea ice.

In the past, this region of maximum heat exchange has coincided with the location where oceanic conditions are optimal for convection to occur. However, in recent years, the sea ice has retreated and with it the region of maximum heat exchange. As a result, there has been a reduction in the heat exchange over the locations where sinking occurs in the ocean. This has the potential to weaken oceanic convection in the Greenland and Iceland Seas.

Read more at Retreating Sea Ice Linked to Changes in Ocean Circulation, Could Affect European Climate

Sunday, June 28, 2015

Fuels from Canadian Oil Sands Have Larger Carbon Footprint, Analysis Finds

Gasoline and diesel refined from Canadian oil sands have a significantly larger carbon footprint and climate impact than fuels from conventional crude sources, according to an analysis by the U.S. Department of Energy's Argonne National Laboratory. Oil sands-derived fuels will release on average 20 percent more carbon into the atmosphere over their lifetime (and possibly up to 24 percent more), depending on how they are extracted and refined, the study says. Methane emissions from tailings ponds and carbon emissions from land disturbance and field operations also contribute to the higher carbon footprint.

"This is important information about the greenhouse gas impact of this oil source, and this is the first time it has been made available at this level of fidelity," said Hao Cai, the Argonne researcher who led the study. Roughly 9 percent of the total crude processed in U.S. refineries in 2013 came from the Canadian oil sands, and that percentage is projected to rise to 14 percent by 2020.

Read original article at Fuels from Canadian Oil Sands Have Larger Carbon Footprint, Analysis Finds

On the Legal Front - by James Hansen

The only way to win the carbon war soon enough to avert unacceptable casualties of young people and other life on the planet is to carry out the battle on several fronts simultaneously. (That’s the reason for the expansive name of our organization, Climate Science, Awareness and Solutions (CSAS), and also the reason that we support disparate organizations, including Citizens Climate Lobby, Our Children’s Trust, 350.org…).

We sometimes ask whether we are using our time well: are we spending too much time on the legal front? After all, the end game begins only when we achieve an across-the-board, transparent (thank you, Pope Francis, for recognizing that!) carbon fee. Focus on a carbon fee is a high priority because of the danger that the Paris agreements may amount to little more than national “goals” and, what’s worse, more effete, ineffectual “cap-and-trade” shenanigans. A focus on science is also needed, as there is still no widespread recognition of the urgency of emission reductions, and better understanding of the science is required to achieve a good strategy to restore energy balance.

The legal approach complements recognition of the moral dimensions of climate change. Can you imagine civil rights advancing without help of the courts? Yet courts will not likely move, and they did not move in the case of civil rights, until the public recognizes the moral dimension and begins to demand action. So it is also essential to get the public more widely involved.

When a judge issues a ruling, it carries a certain gravity. It seems that courts retain more respect with the public than legislatures do. So it is wonderful to report two important legal victories this week. Both are due to remarkably capable, determined individuals who simply will not give up.

First, the Dutch case. The Netherlands, which will cease to exist within a century or so if the world stays on its present carbon path, is an appropriate place for the first European case in which citizens attempt to hold a state responsible for its inaction in the face of clear danger. The Dutch district court in the Hague ruled for the plaintiff, Urgenda, an environmental organization. The court ordered the Dutch government to reduce emissions 25% by 2020, a stiff order. The hero behind the scenes was lawyer, legal scholar, and author Roger Cox, who has relentlessly pursued this action for the past several years on behalf of young people and future generations.

In Seattle, a King County Superior Court judge ordered the State to reconsider the petition of eight youth, who brought their case with the help of Our Children’s Trust, requesting that the state reduce emissions consistent with the dictates of the best available science. That science was provided in testimony to the court by Pushker Kharecha, Deputy Director of CSAS, based on our paper in PLOS One, which was not disputed by Washington State and which calls for a reduction of emissions by 6% per year. The relentless behind-the-scenes champions in this case are Julia Olson (director of Our Children’s Trust) and legal scholar Mary Wood.
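A 6% annual cut compounds from year to year. The short Python sketch here illustrates this under one stated assumption: each year's emissions are 6% below the previous year's (a simple geometric decline). That assumption is ours, for illustration only, and is not necessarily the exact pathway described in the PLOS One paper; the function name `remaining_fraction` is hypothetical.

```python
# Sketch: how a constant 6% annual emissions cut compounds over time.
# ASSUMPTION: a geometric decline, i.e. each year's emissions are 6% below
# the previous year's. The paper's actual pathway may differ.
ANNUAL_CUT = 0.06


def remaining_fraction(years: int, annual_cut: float = ANNUAL_CUT) -> float:
    """Fraction of starting emissions left after `years` of compounding cuts."""
    return (1.0 - annual_cut) ** years


if __name__ == "__main__":
    for years in (5, 10, 20, 35):
        left = remaining_fraction(years)
        print(f"after {years:2d} years: {left:.0%} of starting emissions remain")
```

Under that assumption, emissions fall to roughly half their starting level after 11 to 12 years.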

Read more at On the Legal Front - by James Hansen

Google to Convert Coal Power Plant to Data Center

Google will convert an old coal-fired power plant in rural Alabama into a data center powered by renewable power, expanding the company’s move into the energy world.

The technology giant said on Wednesday that it would open its 14th data center inside the grounds of the old coal plant, and had reached a deal with the Tennessee Valley Authority, the region’s power company, to supply the project with renewable sources of electricity. With the coal plant rehab, Google solidifies a reputation among tech companies for promoting clean energy.

“It’s very important symbolism to take an old coal plant that is a relic of the old energy system and convert it into a data center that will be powered by renewable energy,” said David Pomerantz, climate and energy campaigner for Greenpeace.

Michael Terrell, who leads energy market strategy for Google’s infrastructure team, said the company saw clear benefits in taking over the old coal facility. “There is an enormous opportunity when you take over the infrastructure that is there – the transmission lines and the water intakes – and use that to power a data center that is powered by renewable energy,” he said.

Outside utilities, Google claims to be the largest user of renewable energy in the U.S. The company says it uses 1.5 percent of wind power capacity in the U.S., and has plans to bring in more alternatives over the next year.

However, the company overall still has a relatively heavy carbon footprint compared with Apple.

About 46 percent of Google’s data centers are powered by renewable energy. Apple’s, by contrast, are powered 100 percent by clean energy, according to Greenpeace. Google is committed to going 100 percent renewable but has no target date.

Google went into the renewable energy business earlier with data centers in Iowa and Oklahoma. However, the company still has three data centers in southeastern states that rely heavily on coal and nuclear power: North Carolina, South Carolina, and Georgia.

Pomerantz said the move into Alabama was so encouraging because it challenged the dominance of coal and nuclear power in the region.

The company said it would be working directly with TVA to bring more wind power into the grid. “We see a lot of value in redeveloping big industrial sites like this. There is a lot of electric and other infrastructure that we can re-use,” Matt Kalman, a Google spokesman, said in an email.

Read more at Google to Convert Coal Power Plant to Data Center

Scotland Got Half Its Electricity from Renewables in 2014

New data from the Scottish government shows that the country generated 49.8 percent of its electricity from renewables in 2014, effectively meeting its target of generating half of electricity demand from clean sources by the end of this year.

The milestone means the 50 percent target was met a year early, with overall total renewable generation up 5.4 percent from 2013. The next benchmark in the government’s plan is to generate enough renewable energy to power 100 percent of the country’s demand by 2020.

Results from the first quarter of 2015 show that growth is continuing at a rapid rate. Scottish wind farms produced a record amount of power in the first three months of this year, up 4.3 percent from the first quarter of 2014. The wind farms produced a total of 4,452 gigawatt hours (GWh), enough to power some one million U.K. homes for a year.

Thanks to a massive investment in onshore and offshore wind, Scotland has established itself as a renewable energy leader in the region. According to the new figures, Scotland’s renewable electricity generation of just over 19,000 GWh made up about 30 percent of the U.K.’s total renewable generation in 2014. More than half of this came from wind, with nearly another third coming from hydropower. Only 137.9 GWh came from solar.

While Scotland’s renewable energy sector is currently thriving, prospects are not necessarily as sunny going forward. Last week the U.K. government, led by recently re-elected Conservative Prime Minister David Cameron, announced intentions to end new subsidies for onshore wind farms next April. Energy and Climate Change Secretary Amber Rudd said that “onshore wind is an important part of our energy mix,” but that the U.K. now has enough “subsidized projects in the pipeline to meet our renewable energy commitments.”

The U.K. has an overall binding target of getting 15 percent of the energy it uses for heat, transport, and power from clean sources by 2020. On Thursday, the Department of Energy and Climate Change announced that the share of renewables in 2014 was 6.3 percent, ahead of the interim 2014 target of 5.4 percent.

The amount of electricity generated from renewable sources in the U.K. in 2014 was 64,654 GWh, a 21 percent increase on 2013. The greatest increase in renewable generation came from biomass, which has become a controversial source of power due to the local environmental impacts of logging and the greenhouse gas emissions associated with transporting the fuel, oftentimes from American forests across the Atlantic. Wind energy still accounted for about half of all renewable generation in the U.K.
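As a quick consistency check, a short Python calculation can divide Scotland's renewable generation by the U.K. total. Both inputs come from the article; using exactly 19,000 GWh for Scotland's "just over 19,000 GWh" is our simplification.

```python
# Consistency check on the 2014 renewable-generation figures in the article.
# ASSUMPTION: Scotland's "just over 19,000 GWh" is rounded down to 19,000.
SCOTLAND_RENEWABLE_GWH = 19_000  # Scotland, 2014
UK_RENEWABLE_GWH = 64_654        # whole U.K., 2014

share = SCOTLAND_RENEWABLE_GWH / UK_RENEWABLE_GWH
print(f"Scotland's share of U.K. renewable generation: {share:.1%}")
```

The result, a little over 29 percent, agrees with the "about 30 percent" share reported for Scotland.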

Read more at Scotland Got Half Its Electricity from Renewables in 2014

Saturday, June 27, 2015

Clean Energy Potential Gets Short Shrift in Policymaking, Group Says

Faulty projections by EIA on renewable energy growth are being used in critical policies like the Clean Power Plan, a trade group says.

The Energy Information Administration—the federal agency responsible for forecasting energy trends—has consistently and significantly underestimated the potential of renewable energy sources, misinforming Congress, government agencies and others that use the forecasts to analyze and develop policies, according to a report by a trade group of clean energy companies.

Wind generating capacity, for example, has increased on average by about 6.5 gigawatts each of the past 8 years, according to the report released Monday by Advanced Energy Economy, the trade association. But the EIA has projected that only 6.5 gigawatts would be added between 2017 and 2030. Similarly, forecasts from industry groups that take projects in the pipeline into account show solar energy generation doubling by 2016 as solar costs plummet, while the EIA projects the doubling will take 10 more years.
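To put the wind gap in perspective, here is a rough Python calculation. It rests on two assumptions of ours, not the report's: that the recent average of about 6.5 GW per year simply continues, and that "between 2017 and 2030" means 14 calendar years counted inclusively. The helper names are hypothetical.

```python
# Back-of-the-envelope comparison of the wind figures quoted in the article.
# ASSUMPTIONS: the recent ~6.5 GW/year pace continues unchanged, and the
# 2017-2030 window is counted as 14 inclusive calendar years.
HISTORICAL_ADDITIONS_GW_PER_YEAR = 6.5  # average over the past 8 years
EIA_PROJECTED_TOTAL_GW = 6.5            # EIA projection for 2017-2030


def years_inclusive(start: int, end: int) -> int:
    """Count calendar years from start to end, including both endpoints."""
    return end - start + 1


def compare_wind_outlooks(start: int = 2017, end: int = 2030):
    """Return (years, capacity added at the recent pace, ratio to EIA figure)."""
    years = years_inclusive(start, end)
    trend_total = HISTORICAL_ADDITIONS_GW_PER_YEAR * years
    ratio = trend_total / EIA_PROJECTED_TOTAL_GW
    return years, trend_total, ratio


years, trend_total, ratio = compare_wind_outlooks()
print(f"{years} years at the recent pace: {trend_total:.1f} GW "
      f"vs. {EIA_PROJECTED_TOTAL_GW} GW projected ({ratio:.0f}x)")
```

Under these assumptions, the recent pace would add roughly 14 times the capacity the EIA projected for the whole period.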

"If the EIA has a lag on their assumptions, that might get perpetuated into other work," said Ryan Katofsky, lead author of the report and director of industry analysis at Advanced Energy Economy. The group's members include General Electric, SolarCity and SunPower, some of the largest U.S. wind and solar companies. The findings align with those of other environmental groups and the Lawrence Berkeley National Laboratory.

The EPA relied on EIA estimates in developing national carbon regulations for power plants, known as the Clean Power Plan. The rules set state-level emission reduction targets, which the EPA partly calculated by estimating the potential for renewable energy generation. Because the EPA used the EIA's assumptions, it underestimated renewables growth and set less ambitious targets than it could have done, according to Katofsky and researchers at the Union of Concerned Scientists and other environmental groups.

In their comments to the EPA on the Clean Power Plan, several states—including Kentucky and Wyoming—and utilities said that shifting away from coal to natural gas and developing renewable energy and energy efficiency programs would be expensive and a burden on the public. But according to the report, the costs of solar are already about half of what the EIA estimates, and as renewable energy continues to capture a larger share of the market, states will be able to comply with the Clean Power Plan at a low cost.

Read more at Clean Energy Potential Gets Short Shrift in Policymaking, Group Says

Climate Change Could Cause More Than $40 Billion in Damage to National Parks

A report released this week by the National Park Service (NPS) found that sea level rise — a phenomenon caused by climate change — could cause more than $40 billion in damage to America’s national parks.

The report, released in time for the two-year anniversary of the announcement of President Obama’s Climate Action Plan, examines the effects of sea-level rise on 40 coastal national parks across the United States. The NPS study examined “assets” in each national park, defined as historic sites, infrastructure, museum collections, and other cultural resources, and found that over 39 percent of the 10,000 assets were categorized as “high-exposure” to sea-level rise caused by climate change.

In total, it found, damages to high-exposure assets would cost taxpayers more than $40 billion.

“Climate change is visible at national parks across the country, but this report underscores the economic importance of cutting carbon pollution and making public lands more resilient to its dangerous impacts,” said Department of the Interior Secretary Sally Jewell in a press release on Tuesday. Jewell also expressed hope that the NPS research could be used to “help protect some of America’s most iconic places.”

According to the study, low-lying coastal parks in the NPS’s Southeast Region will face the greatest risk of damage. Cost estimates for rebuilding infrastructure and assets at Cape Hatteras National Seashore, a prominent national park in North Carolina, stand at $1.2 billion. In addition to the Southeast Region, high-exposure sites include the Statue of Liberty, Golden Gate, and the Redwoods.

Though the rise in sea level will vary by location, scientists generally project a one-meter rise over the next 100 to 150 years.

Read more at Climate Change Could Cause More Than $40 Billion in Damage to National Parks

Leading Health Experts Call for Fossil Fuel Divestment to Avert Climate Change

'Divestment rests on the premise that it is wrong to profit from an industry whose core business threatens human and planetary health'

More than 50 of the world’s leading doctors and health researchers called on charities to divest from fossil fuel companies in an open letter Thursday. The letter, published in the Guardian, argues that climate change poses a dire risk to public health and that fossil fuel companies are unlikely to take action to reduce carbon emissions without prodding.

“Divestment rests on the premise that it is wrong to profit from an industry whose core business threatens human and planetary health,” the health experts wrote. The case for divestment brings “to mind one of the foundations of medical ethics—first, do no harm.”

The letter is the latest show of support from the medical community for efforts to halt climate change. Recent research has outlined a variety of public health issues caused by climate change, from heat stroke deaths to increased asthma rates. Just this week a study in The Lancet outlined how climate change could erode 50 years of health advances.

The open letter alluded to those impacts and suggested that divestment would be the best way for global charities to address them. Engaging with fossil fuel companies’ boards has not been shown to work, the researchers wrote, likening the oil industry to the tobacco industry.

“Our primary concern is that a decision not to divest will continue to bolster the social licence of an industry that has indicated no intention of taking meaningful action,” researchers wrote.

The long list of signatories includes the editors of The Lancet and BMJ, two leading medical journals, as well as medical professors from across the United Kingdom. The letter specifically calls on the Wellcome Trust and the Gates Foundation, two nonprofits that are leading contributors to global health causes, to divest their multibillion-dollar endowments from fossil fuel companies. Together the two foundations control endowments worth more than $70 billion.

Read more at Leading Health Experts Call for Fossil Fuel Divestment to Avert Climate Change

With '15 Energy Plan, N.Y. Bids to Become Cleanest Energy State in U.S.

New York made a bold declaration about clean energy Thursday with a state energy plan that promises a host of policy efforts aimed at scrubbing the state of fossil fuels and advancing a future heavy on distributed generation and renewable energy.

The much-anticipated document from Gov. Andrew Cuomo (D) lays out targets under the state's fast-moving "reforming the energy vision" (REV) for electricity and complementary programs for other areas. Taken together, the promised targets under those programs listed in the document would cut greenhouse gas emissions by 80 percent by 2050.

The targets by 2030 include: a 40 percent cut in greenhouse gases from 1990 levels, a 50 percent statewide goal for renewable generation and a 23 percent cut from 2012 levels for energy consumption in buildings.

Alongside these targets was an announcement by the New York State Energy Research and Development Authority that Cuomo will seek $5 billion over 10 years to support programs like the NY-Sun solar initiative and the New York Green Bank. That is in addition to a separate 10-year, $1.5 billion NYSERDA proposal to promote large-scale solar and wind projects.

The state energy plan, while nonbinding, seeks to set the tone as a kind of road map for the ongoing REV proceeding, as well as other programs. If one thing was clear in the far-reaching document, it was that the REV reforms -- aimed at overhauling the power grid in favor of distributed generation and demand -- will be crucial to achieve the ambitious goals set forth.

A frequently asked questions document attached to the plan describes three "pillars" to meet all the goals set out above: the REV process through the Public Service Commission, the NYSERDA Clean Energy Fund, and changes to the New York Power Authority's strategy to reduce energy demand and serve as a model for the state's private utilities.

As for the cost, the document describes incentives to come that will prod consumers into lowering their energy use, while REV is meant to "unleash new market opportunities" for new players seeking to capitalize on the state's proposed transformation.

The plan also warns about an over-reliance on natural gas and pledges to help avoid $30 billion in needed transmission upgrades over the next 10 years largely through REV. The argument is made that improving the state's generation load capacity utilization -- which is about 55 percent today -- will yield up to $330 million in annual savings to ratepayers by ending the need for peak generation in favor of a distributed grid.

"The overall system is both energy and capital inefficient," the energy plan says, adding that the answer is to "spend prudently the required capital on infrastructure improvements, in ways that improve the grid's overall system efficiency."

"Solutions that reduce or shift peak load such as demand management systems, energy efficiency and energy storage, most often require significantly less capital investment," the plan says. "These solutions should be seriously considered, wherever practical, as complementary to investments in smart transmission and distribution infrastructure to meet the system's reliability needs."

The document returns time and time again to REV, saying it will "unlock these savings by facilitating and encouraging investment (particularly private capital investment) in cost-effective, clean distributed energy resources and other solutions that will reduce peak load and improve system efficiency."

Read more at With '15 Energy Plan, N.Y. Bids to Become Cleanest Energy State in U.S.

Rapidly Acidifying Arctic Ocean Threatens Fisheries

Within the next 10 years, parts of the Arctic Ocean could reach levels of ocean acidification that would threaten the ability of marine animals to form shells, new research suggests.

Die-offs in such creatures could have ramifications up the food chain in some of the most productive fisheries in the world and provide a preview of what is in store for the rest of the world’s oceans down the road.

“The Arctic can be a great indicator” of future issues, oceanographer Jeremy Mathis, of the Pacific Marine Environmental Laboratory, said.

Ocean acidification is a process happening in tandem with the warming of the planet and is driven by the same human-caused increase of carbon dioxide in the atmosphere that is trapping excess heat. The oceans absorb much of that excess CO2, where it dissolves and reacts with water to form carbonic acid (also found in soda and seltzer).

As CO2 emissions have continued to grow, so has the amount of carbonic acid in the oceans, decreasing their pH. The ocean generally has a pH of 8.2, making it slightly basic (a neutral pH is 7, while anything above is basic and anything below is acidic). An ocean that is becoming less basic is a problem for creatures such as shellfish and coral that depend on specific ocean chemistry to have enough of the mineral calcium carbonate to make their hard shells and skeletons.
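Because pH is a logarithmic scale (pH = -log10 of the hydrogen ion concentration), small-looking changes in pH correspond to large changes in acidity. The short Python sketch below illustrates that arithmetic. The function names are hypothetical, and the drop from 8.2 to 8.1 is an illustrative value chosen for the example, not a figure from the article.

```python
def hydrogen_ion_concentration(ph: float) -> float:
    """Return the hydrogen ion concentration [H+] in mol/L, using pH = -log10([H+])."""
    return 10 ** (-ph)


def acidity_increase(ph_before: float, ph_after: float) -> float:
    """Factor by which [H+] grows when pH falls from ph_before to ph_after."""
    return hydrogen_ion_concentration(ph_after) / hydrogen_ion_concentration(ph_before)


# Illustrative values only: 8.2 is the typical ocean pH cited in the article;
# 8.1 is a hypothetical 0.1-unit drop used to show the logarithmic effect.
factor = acidity_increase(8.2, 8.1)
print(f"[H+] at pH 8.2: {hydrogen_ion_concentration(8.2):.2e} mol/L")
print(f"A drop to pH 8.1 raises [H+] by about {(factor - 1) * 100:.0f}%")
```

Since the ratio equals 10 raised to the size of the drop, a 0.1-unit fall in pH means roughly 26 percent more hydrogen ions in the water.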

...

What’s happening in the Arctic now and what will come to pass over the next decade or two also show what will eventually happen in the rest of the oceans, especially if CO2 emissions continue unabated.

Read more at Rapidly Acidifying Arctic Ocean Threatens Fisheries

A New Look for Nuclear Power: MIT News

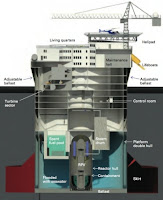

A nuclear power plant that will float eight or more miles out to sea promises to be safer, cheaper, and easier to deploy than today’s land-based plants.

Many experts cite nuclear power as a critical component of a low-carbon energy future. Nuclear plants are steady, reliable sources of large amounts of power; they run on inexpensive and abundant fuel; and they emit no carbon dioxide (CO2).

A novel nuclear power plant that will float eight or more miles out to sea promises to be safer, cheaper, and easier to deploy than today’s land-based plants. In a concept developed by MIT researchers, the floating plant combines two well-established technologies: a nuclear reactor and a deep-sea oil platform. It is built and decommissioned in a shipyard, saving time and money at both ends of its life. Once deployed, it sits in relatively deep water well away from coastal populations, linked to land only by an underwater power transmission line. At the specified depth, the seawater protects the plant from earthquakes and tsunamis and can serve as an effectively unlimited source of cooling water in an emergency, with no pumping needed. An analysis of potential markets has identified many sites worldwide with physical and economic conditions suitable for deployment of a floating plant.

“More than 70 new nuclear reactors are now under construction, but that’s not nearly enough to make a strong dent in CO2 emissions worldwide,” says Jacopo Buongiorno, professor of nuclear science and engineering (NSE) at MIT. “So the question is, why aren’t we building more?”

...

Buongiorno cites several challenges to this vision. First, while the fuel is cheap, building a nuclear plant is a long and expensive process often beset by delays and uncertainties. Second, siting any new power plant is difficult: land near sources of cooling water is valuable, and local objection to construction may be strenuous. And third, the public in several important countries has lost confidence in nuclear power. Many people still clearly remember the 2011 accident at the Fukushima nuclear complex in Japan, when an earthquake created a tsunami that inundated the facility. Power to the cooling pumps was cut, fuel in the reactor cores melted, radiation leaked out, and more than 100,000 people were evacuated from the region.

In light of such concerns, Buongiorno and his team — Michael Golay, professor of NSE; Neil Todreas, the KEPCO Professor of Nuclear Science and Engineering and Mechanical Engineering; and their NSE and mechanical engineering students — have been investigating a novel idea: mounting a conventional nuclear reactor on a floating platform similar to those used in offshore oil and gas drilling, and mooring it about 10 miles out to sea.

...

The researchers' vision for an Offshore Floating Nuclear Plant (OFNP, visible left and above) includes a main structure about 45 meters in diameter that will house a plant generating 300 megawatts of electricity. An alternative design for a 1,100-MW plant calls for a structure about 75 meters in diameter. In both cases, the structures include living quarters and helipads for transporting personnel — similar to offshore oil drilling platforms.

Read more at A New Look for Nuclear Power: MIT News
